In this blog post, I’ll introduce you to Zuul, tell you a little about API gateways, then dive into Spring Cloud Zuul, the specific implementation module we chose. I’ll also describe the three buckets that the various components of our implementation fall into and explain how we tune and configure them. I’ll finish by discussing the Zuul-related metrics we collect and our future plans for the technology. And I’ll sprinkle some code samples throughout to show you how we’ve approached specific problems.

What is Zuul?

Zuul is Netflix’s Java-based API gateway. Netflix has used Zuul for all of its externally facing APIs for the past several years, so it’s battle-tested.

Internally, Zuul runs every request through a set of filters. The filters are resolved at runtime and share state with one another through a “Request Context.” They generally fall into one of four types:

- PRE – Invoked before traffic is sent to upstreams, the PRE filter is typically used to determine upstreams and/or to inject metadata.

- ROUTE – Invoked after an upstream has been chosen, the ROUTE filter typically handles the actual proxy operation.

- POST – Invoked after an upstream has begun to return a response, the POST filter typically handles tasks such as rewriting response headers or auditing.

- ERROR – Invoked when a fatal error occurs in the processing of the filter chain, the ERROR filter is typically used to present the end user with a “pretty” error message or UI.
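To make the four phases concrete, here is a minimal, dependency-free sketch of a phased filter chain with a shared context. It is a toy model under stated assumptions, not Zuul's actual API: the class `FilterChainSketch`, the `Action` interface, and the context keys are all hypothetical, and the sketch assumes that a failure in any phase skips the remaining phases and hands control to the ERROR filters.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of a phased filter chain. None of these types are Zuul's real classes. */
final class FilterChainSketch {

    enum Phase { PRE, ROUTE, POST, ERROR }

    /** Hypothetical filter body; it reads and writes the shared request context. */
    interface Action {
        void apply(Map<String, Object> context) throws Exception;
    }

    private static final class Filter {
        final Phase phase;
        final int order;
        final Action action;

        Filter(Phase phase, int order, Action action) {
            this.phase = phase;
            this.order = order;
            this.action = action;
        }
    }

    private final List<Filter> filters = new ArrayList<>();

    FilterChainSketch add(Phase phase, int order, Action action) {
        filters.add(new Filter(phase, order, action));
        return this;
    }

    /** Runs PRE, ROUTE, then POST; any failure is recorded and handed to the ERROR filters. */
    Map<String, Object> handle() {
        Map<String, Object> context = new HashMap<>();
        try {
            runPhase(Phase.PRE, context);
            runPhase(Phase.ROUTE, context);
            runPhase(Phase.POST, context);
        } catch (Exception failure) {
            context.put("error", failure.getMessage());
            try {
                runPhase(Phase.ERROR, context);
            } catch (Exception ignored) {
                // An ERROR filter failed too; keep the original error in the context.
            }
        }
        return context;
    }

    /** Runs the filters of one phase in ascending order. */
    private void runPhase(Phase phase, Map<String, Object> context) throws Exception {
        List<Filter> selected = new ArrayList<>();
        for (Filter filter : filters) {
            if (filter.phase == phase) {
                selected.add(filter);
            }
        }
        selected.sort(Comparator.comparingInt(f -> f.order));
        for (Filter filter : selected) {
            filter.action.apply(context);
        }
    }
}
```

A PRE filter could, for example, put an `upstream` entry into the context, and a ROUTE filter registered later could read it back. Registration order does not matter in this sketch, only the phase and the order value.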

As I write this, Zuul 1.x is the current OSS version. Zuul 1.x is Servlet 2.x-based and, therefore, is blocking in nature. Each request into Zuul occupies a single container thread, which limits the number of concurrent requests that can be handled to the number of worker threads available in your app container.
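The effect of a thread-per-request model can be sketched with a plain `ThreadPoolExecutor`. This is only an illustration of the general behavior, not Tomcat's or Zuul's implementation: the class name `BlockingWorkerPoolSketch` is hypothetical, and the latch stands in for a blocking call to an upstream.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Shows that a blocking worker pool handles at most `workers` requests at once. */
final class BlockingWorkerPoolSketch {

    /** Returns {activeRequests, queuedRequests} while every worker is blocked. */
    static int[] saturate(int workers, int requests) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                workers, workers, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        CountDownLatch started = new CountDownLatch(Math.min(workers, requests));
        CountDownLatch release = new CountDownLatch(1);
        try {
            for (int i = 0; i < requests; i++) {
                pool.execute(() -> {
                    started.countDown();
                    try {
                        release.await(); // stands in for a blocking upstream call
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
            started.await(); // wait until every worker thread is occupied
            int[] snapshot = { pool.getActiveCount(), pool.getQueue().size() };
            release.countDown();
            pool.shutdown();
            pool.awaitTermination(5, TimeUnit.SECONDS);
            return snapshot;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
    }
}
```

With two workers and five requests, two requests are in flight and the other three wait in the queue until a worker is released.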

Zuul 2.x is designed to address this by processing requests asynchronously using Reactive Streams. For more information, see the “Zuul 2” post on the Netflix TechBlog (Medium).

What are API gateways?

We’ve established that Zuul is an API gateway, but that raises another question: What are API gateways? An API gateway is a layer 7 (HTTP) router that acts as a reverse proxy for upstream services that reside inside your platform. API gateways are typically configured to route traffic based on URI paths and have become especially popular in the microservices world because exposing potentially hundreds of services to the Internet is both a security nightmare and operationally difficult. With an API gateway, one simply exposes and scales a single collection of services (the API gateway) and updates the API gateway’s configuration whenever a new upstream should be exposed externally.
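Path-based routing can be sketched as a longest-prefix lookup. The following is an illustrative example only: `PathRouterSketch`, the path prefixes, and the upstream URLs are hypothetical, and real gateways typically support richer patterns than plain prefixes.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Resolves a request path to an upstream by longest matching path prefix. */
final class PathRouterSketch {

    private final Map<String, String> routes = new LinkedHashMap<>();

    /** Registers an upstream base URL for every path that starts with the prefix. */
    PathRouterSketch route(String pathPrefix, String upstream) {
        routes.put(pathPrefix, upstream);
        return this;
    }

    /** Returns the upstream with the longest matching prefix, if any route matches. */
    Optional<String> resolve(String requestPath) {
        String bestPrefix = null;
        for (String prefix : routes.keySet()) {
            boolean matches = requestPath.startsWith(prefix);
            if (matches && (bestPrefix == null || prefix.length() > bestPrefix.length())) {
                bestPrefix = prefix;
            }
        }
        return Optional.ofNullable(bestPrefix).map(routes::get);
    }
}
```

Exposing a new upstream then amounts to adding one more `route(...)` entry to the gateway's configuration, while the upstream itself stays unreachable from the Internet.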

This provides three advantages:

- Centralized logic. Concerns such as authentication (authn) and authorization (authz) can be handled in one location.

- A single point of ingress. All inbound traffic funnels through a single point of entry, which simplifies the configuration of things like firewall rules and access logging.

- Trace injection. Having a single point of entry makes metadata injection easy. Things like trace IDs can be generated, injected, and then audited in your API gateway.
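As an illustration of trace injection, the sketch below adds a trace ID to a request's headers when none is present. The header name `X-Trace-Id` and the class `TraceInjectionSketch` are assumptions made for the example, as is the choice to keep an ID that the client already supplied. A gateway could just as reasonably overwrite it.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.UUID;

/** Ensures every request forwarded to an upstream carries a trace ID header. */
final class TraceInjectionSketch {

    /** Hypothetical header name; use whatever your tracing setup expects. */
    static final String TRACE_HEADER = "X-Trace-Id";

    /** Returns a copy of the headers that is guaranteed to contain a trace ID. */
    static Map<String, String> withTraceId(Map<String, String> inboundHeaders) {
        // HTTP header names are case-insensitive, so look them up that way.
        Map<String, String> outbound = new TreeMap<>(String.CASE_INSENSITIVE_ORDER);
        outbound.putAll(inboundHeaders);
        String existing = outbound.get(TRACE_HEADER);
        if (existing == null || existing.trim().isEmpty()) {
            outbound.put(TRACE_HEADER, UUID.randomUUID().toString());
        }
        return outbound;
    }
}
```

Because every request passes through the gateway, downstream services can rely on the header being present and log it alongside their own messages.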

Using an API gateway does have one main disadvantage: It adds an extra hop, because traffic must be processed by the API gateway before it is passed along to upstreams. In our experience, the advantages vastly outweigh this cost.

Spring Cloud Zuul

As part of its Netflix integrations, Spring Cloud provides a Zuul integration module. It provides a fairly comprehensive set of Spring-friendly configuration points and base filters that allow you to get up and running with Spring Boot + Zuul quickly. Because we use Spring Boot in production, Spring Cloud’s spring-cloud-starter-netflix-zuul module was a natural choice for us to get things off the ground.

Architectural overview

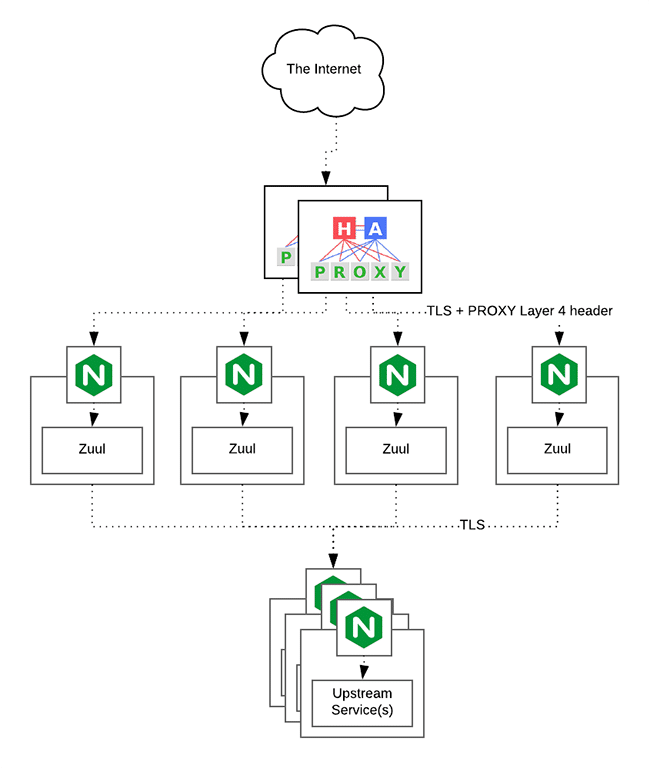

The various components of our implementation are organized into three buckets:

- HAProxy performs layer 4 load balancing (with the proxy protocol enabled) across our Zuul nodes. High availability is provided via Keepalived.

- NGINX performs static resource caching, TLS termination, connection keep-alives, Gzipping, and other services.

- Zuul is the API gateway itself.

The diagram shows how these components are connected.

The components of Anaplan’s Zuul implementation are organized into three buckets: HAProxy, Zuul, and target services.

Here are some things to consider about the arrangement we are using:

- We didn’t use Ribbon/Hystrix. Because we plan to integrate Envoy and Consul in the near future, we decided to delegate load balancing and discovery to them. Another blog post on that project will follow.

- To minimize TLS hops, we opted for layer 4 (TCP) load balancing in HAProxy.

- The proxy protocol was enabled to provide source IP address pass-through to Zuul instances.

- Keepalived was used in active/active mode to provide failover if an HAProxy instance is lost.

- An NGINX instance is paired with each Zuul instance. This provides automatic horizontal scaling and minimizes network overhead when reverse proxying to and from locally running Zuul instances.
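To show what the proxy protocol carries, here is a minimal sketch that parses a version 1 (text) PROXY protocol header line, the form `PROXY TCP4 <src> <dst> <srcport> <dstport>` terminated by CRLF. In our stack this parsing is done by the receiving proxy, not by application code, so `ProxyProtocolV1Sketch` is purely illustrative and hypothetical. It handles only the TCP4 and TCP6 forms and rejects everything else, including `PROXY UNKNOWN`.

```java
/** Minimal parser for a PROXY protocol v1 (text) header line. */
final class ProxyProtocolV1Sketch {

    /** The original client and destination endpoints carried in the header. */
    static final class Header {
        final String sourceAddress;
        final int sourcePort;
        final String destinationAddress;
        final int destinationPort;

        Header(String sourceAddress, int sourcePort,
               String destinationAddress, int destinationPort) {
            this.sourceAddress = sourceAddress;
            this.sourcePort = sourcePort;
            this.destinationAddress = destinationAddress;
            this.destinationPort = destinationPort;
        }
    }

    /** Parses e.g. "PROXY TCP4 192.0.2.10 198.51.100.5 56324 443\r\n". */
    static Header parse(String line) {
        if (!line.startsWith("PROXY ") || !line.endsWith("\r\n")) {
            throw new IllegalArgumentException("not a PROXY v1 header");
        }
        // Fields: PROXY, protocol, source address, destination address, source port, destination port.
        String[] parts = line.substring(0, line.length() - 2).split(" ");
        boolean tcp = parts.length == 6
                && (parts[1].equals("TCP4") || parts[1].equals("TCP6"));
        if (!tcp) {
            throw new IllegalArgumentException("unsupported PROXY v1 header: " + line.trim());
        }
        return new Header(parts[2], Integer.parseInt(parts[4]),
                parts[3], Integer.parseInt(parts[5]));
    }
}
```

The source address recovered this way is what lets access logs and rate limits see the real client IP even though the TCP connection arrives from the load balancer.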

Tuning and configuration

HAProxy

Because HAProxy (or HA-P) is the first thing that receives traffic in your stack, it must be able to handle all of your API traffic. It needs to balance loads between backends and to copy bytes between the client and your Zuul instances. Because we’ve configured NGINX to be fairly aggressive in terms of keepalives, this translates to lots of extra connections that HA-P needs to deal with.

As for the actual tuning of HA-P, we followed a few write-ups we found online and then ran benchmarks after each tweak to confirm the changes. One blog post we found especially useful was How we fine-tuned HAProxy to achieve 2,000,000 concurrent SSL connections by Sachin Malhotra. We didn’t intend to support two million concurrent connections from day one, but it’s good to know that we can with our current setup.

NGINX

Our NGINX is mainly optimized for keeping connections and TLS sessions alive for a decent amount of time. We also cache our static content (assuming it’s properly set up for cache busting) in NGINX, which vastly decreases the number of requests handled by upstreams. Here’s a sample NGINX configuration used by a given Zuul instance:

    upstream local_zuul {
        # The keepalive parameter sets the maximum number of idle keepalive connections
        # to upstream servers that are preserved in the cache of each worker process. When
        # this number is exceeded, the least recently used connections are closed.
        keepalive 1000;

        server 127.0.0.1:<%= @server_port %>;
    }

    # Number of requests allowed per keep-alive connection (nginx default is 100)
    keepalive_requests 10000;

    server {
        listen <%= @ssl_proxy_port %> proxy_protocol;
        server_name <%= @external_name %>;

        set $yeti_upstream "http://local_zuul";

        .... SSL and cached paths here ....

        # Catch everything and proxy it along to Zuul...
        location / {
            proxy_pass $yeti_upstream;
            ...

            # The default is HTTP/1.0; upstream keepalive requires HTTP/1.1
            proxy_http_version 1.1;

            # Remove the Connection header if the client sends it,
            # it could be "close" to close a keepalive connection
            proxy_set_header Connection "";

            # Don't buffer requests locally before forwarding to proxy...
            proxy_request_buffering off;
        }
    }

Spring Cloud Zuul

Out of the box, Spring Cloud Zuul worked well in initial testing. Through additional testing, however, we discovered that some default filters buffer the entire contents of a request body into memory in order to act on it. This caused OOM errors when uploading fairly large (greater than 1 GB) request bodies. To deal with this issue, we extended the provided ZuulProxyConfiguration config class and overrode a few of the default filters that were responsible for most of the buffering with this code:

    /**
     * This is a customized version of `ZuulProxyConfiguration` which allows us to override a few
     * bits of the default one's functionality
     */
    @Configuration
    @EnableCircuitBreaker
    @EnableDiscoveryClient
    public class MyZuulConfiguration extends ZuulProxyConfiguration {

        /**
         * Disable these filters, as they attempt to either parse the entire request body, or end up wrapping the
         * request in a wrapper which attempts to parse the body - either of which is a BAD idea for large request bodies
         */
        @Bean
        @Override
        public DebugFilter debugFilter() {
            return new DebugFilter() {
                @Override
                public boolean shouldFilter() {
                    return false;
                }
            };
        }

        @Bean
        @Override
        public Servlet30WrapperFilter servlet30WrapperFilter() {
            return new Servlet30WrapperFilter() {
                @Override
                public boolean shouldFilter() {
                    return false;
                }
            };
        }

        @Bean
        @Override
        public FormBodyWrapperFilter formBodyWrapperFilter() {
            return new FormBodyWrapperFilter() {
                @Override
                public boolean shouldFilter() {
                    return false;
                }
            };
        }

        /**
         * This was actually only needed before our patch was merged into master
         */
        @Bean
        @Override
        public SendResponseFilter sendResponseFilter() {
            return new InputStreamClosingSendResponsePostFilter();
        }
    }
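The difference between buffering and streaming a request body can be sketched without any Zuul classes. The following hypothetical helper, `StreamingCopySketch`, copies a body through a fixed-size buffer, so memory use stays bounded by the buffer size regardless of how large the body is. It illustrates the general technique and is not the code path Spring Cloud Zuul uses.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;

/** Copies a request body to an upstream without holding the whole body in memory. */
final class StreamingCopySketch {

    /** Streams `in` to `out` through a fixed-size buffer and returns the bytes copied. */
    static long copy(InputStream in, OutputStream out, int bufferSize) {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int read;
            while ((read = in.read(buffer)) != -1) {
                out.write(buffer, 0, read); // forward each chunk as soon as it arrives
                total += read;
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }
}
```

A filter that instead reads the whole body into a byte array needs heap proportional to the upload size, which is what makes multi-gigabyte uploads fail.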

We also found that upstream connections in Spring Cloud Zuul weren’t being closed properly. The SendResponseFilter, as provided, relied on the InputStream from a proxied request being read to the end, at which point it closes automatically, rather than closing it explicitly in a finally block. Because the close() operation actually just returns the underlying connection to a connection pool, the original code depended on the underlying InputStream proxy to do the right thing.

We found, however, that in certain situations (that only happened in production), an error could occur while sending data back to the client. In this case, a partially read InputStream (connected to the upstream service) would be left dangling, which caused a connection pool leak.

Here’s the patch we originally added to our Zuul implementation. We also submitted the patch upstream, and it has been merged into the 1.4.x line of Spring Cloud Netflix, the project that provides the Zuul integration.

    /**
     * The whole point of this filter is to ensure that the InputStream coming out of the HttpClient
     * is ALWAYS closed. The super class never calls close() under the assumption that the stream will
     * always be read to the end, even though it won't in exceptional cases
     */
    public class InputStreamClosingSendResponsePostFilter extends SendResponseFilter {

        @Override
        public String filterType() {
            return "post";
        }

        @Override
        public int filterOrder() {
            return 1000;
        }

        @Override
        public Object run() {
            try {
                return super.run();
            }
            finally {
                // Simply force a close for every request...
                doCloseSourceInputStream();
            }
        }

        void doCloseSourceInputStream() {
            RequestContext context = RequestContext.getCurrentContext();
            InputStream inputStream = context.getResponseDataStream();
            if (inputStream != null) {
                try {
                    inputStream.close();
                } catch (IOException e) {
                    // This should actually never happen
                    log.error("Failed to close wrapped stream", e);
                }
            }
        }
    }

Metrics, metrics, and more metrics

One thing I can’t stress enough is the important role of metrics in a distributed cloud platform. This tenet applies to any system but is especially important for a Zuul gateway, as it is responsible for all of your ingress traffic. It’s incredibly important to have as much information as possible when diagnosing problems that may affect some, or all, of your customers.

We started collecting the metrics we wanted by instrumenting the upstream connection-pooling mechanism, as well as overall request-processing information. Both of these are critically important when determining the health of a given Zuul instance. The typical indicator that a Zuul instance is falling over is the number of requests it is handling concurrently. If that number reaches the number of threads available to Tomcat, new requests will be queued until threads become available. This is because Zuul 1.x is blocking in nature.

We expose this metric and others through the CounterService and GaugeService provided by Spring Boot Actuator’s metrics facility. We track the number of in-flight requests with a simple servlet filter using this code:

    /**
     * Keeps track of the number of requests that are currently being handled
     * and reports it to the CounterService
     */
    @Order(Ordered.HIGHEST_PRECEDENCE)
    public class MyMetricsFilter extends OncePerRequestFilter {

        static final String METRIC_HANDLING_NAME = "meter.request.handling",
                            METRIC_MAX_NAME = "meter.request.max";

        private CounterService counterService;
        private GaugeService gaugeService;
        private ServerProperties serverProperties;
        private AtomicBoolean counterInitialized = new AtomicBoolean(false);

        public MyMetricsFilter(CounterService counterService, GaugeService gaugeService,
                               ServerProperties serverProperties) {
            this.counterService = counterService;
            this.gaugeService = gaugeService;
            this.serverProperties = serverProperties;
        }

        @Override
        protected void doFilterInternal(HttpServletRequest request, HttpServletResponse response,
                                        FilterChain filterChain) throws ServletException, IOException {
            try {
                /**
                 * Adding this sync block because of the fact that the ServoCounterService
                 * isn't thread-safe and there's a possibility that we can init the counter twice...
                 */
                if (!counterInitialized.get()) {
                    synchronized (this) {
                        counterService.increment(METRIC_HANDLING_NAME);
                        counterInitialized.set(true);
                    }
                }
                else {
                    counterService.increment(METRIC_HANDLING_NAME);
                }

                gaugeService.submit(METRIC_MAX_NAME, serverProperties.getTomcat().getMaxThreads());
                filterChain.doFilter(request, response);
            }
            finally {
                counterService.decrement(METRIC_HANDLING_NAME);
            }
        }
    }

We had to do a bit more work to get the connection pool metrics we wanted. Our basic idea was to create a timer that periodically inspects the connection pool and generates per-route metrics. These, in turn, are exposed via Actuator using this code:

    /**
     * We're overriding the base SimpleHostRoutingFilter in order
     * to provide access to the underlying HttpConnectionPool...
     */
    public class MetricsAwareSimpleHostRoutingFilter extends BaseZuulFilter {

        ....

        @PostConstruct
        public void init() {
            this.httpClient = newClient(); // This builds our client

            // Metrics are enabled in prod
            if (metricsEnabled) {
                connectionManagerTimer.schedule(new TimerTask() {
                    @Override
                    public void run() {
                        if (connectionManager != null) {
                            // Just report on all of our current HttpRoutes...
                            connectionManager.getRoutes().forEach((route) -> {
                                PoolStats stats = connectionManager.getStats(route);
                                logRouteStats(route, stats);
                            });

                            // Report the total stats...
                            PoolStats stats = connectionManager.getTotalStats();
                            gaugeService.submit(ROUTE_POOL_METRIC_PREFIX + "max", stats.getMax());
                            gaugeService.submit(ROUTE_POOL_METRIC_PREFIX + "leased", stats.getLeased());
                            gaugeService.submit(ROUTE_POOL_METRIC_PREFIX + "available", stats.getAvailable());
                            gaugeService.submit(ROUTE_POOL_METRIC_PREFIX + "pending", stats.getPending());
                        }
                    }
                }, 2000, updateIntervalMs /** This val set via a config property... **/);
            }
        }

        private void logRouteStats(HttpRoute route, PoolStats stats) {
            String gaugeKey = getGaugeKey(route.getTargetHost());
            gaugeService.submit(gaugeKey + ".available", stats.getAvailable());
            gaugeService.submit(gaugeKey + ".leased", stats.getLeased());
            gaugeService.submit(gaugeKey + ".max", stats.getMax());
            gaugeService.submit(gaugeKey + ".total", stats.getAvailable() + stats.getLeased());
        }

        private String getGaugeKey(HttpHost host) {
            return ROUTE_METRIC_PREFIX + host.getSchemeName() + "." +
                    sanitizeHostName(host.getHostName()) + "." + host.getPort();
        }

        private String sanitizeHostName(String hostName) {
            return HOST_SANITIZING_FILTER.matcher(hostName).replaceAll("_");
        }

        ....
    }
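The per-route gauge keys built above can be illustrated in isolation. The excerpt does not show the values of `ROUTE_METRIC_PREFIX` or `HOST_SANITIZING_FILTER`, so the prefix and the regular expression below are assumptions chosen for the example, as is the class name `RouteGaugeKeySketch`. The idea is that dots in a host name would otherwise be read as extra levels in a dot-separated metric name.

```java
import java.util.regex.Pattern;

/** Builds a dot-separated gauge key for one upstream route. */
final class RouteGaugeKeySketch {

    /** Assumed prefix; the real value is not shown in the excerpt above. */
    static final String ROUTE_METRIC_PREFIX = "gauge.zuul.route.";

    /** Assumed pattern: anything that is not a letter, digit, underscore, or hyphen. */
    static final Pattern HOST_SANITIZING_FILTER = Pattern.compile("[^A-Za-z0-9_-]");

    /** Joins scheme, sanitized host name, and port into a single metric key. */
    static String gaugeKey(String scheme, String hostName, int port) {
        String safeHost = HOST_SANITIZING_FILTER.matcher(hostName).replaceAll("_");
        return ROUTE_METRIC_PREFIX + scheme + "." + safeHost + "." + port;
    }
}
```

Under these assumptions, `gaugeKey("http", "orders.internal.example", 8080)` yields `gauge.zuul.route.http.orders_internal_example.8080`, to which suffixes such as `.leased` or `.available` are appended.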

After we’ve generated and exposed all of these metrics, we pipe everything into Splunk where it’s aggregated and displayed on dashboards that track the status of HAProxy, NGINX, and Zuul.

Future plans

We plan to make changes in these areas:

- Service discovery. We plan to integrate Consul to provide service discovery for upstreams.

- Load balancing. Load balancing will move to Envoy in the near future.

- Dynamic upstream route configuration. Routes are configured via Chef today; however, they should ideally be dynamically configured via Consul registration of upstream services.

- Non-blocking implementation. This will overcome the blocking nature of Zuul 1.x.